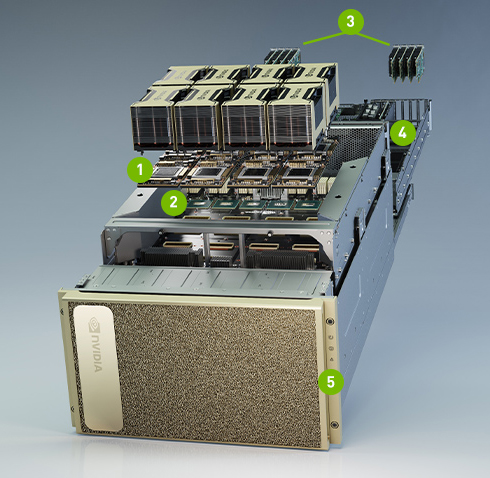

EXPLORE THE POWERFUL COMPONENTS OF DGX A100

01.

8X NVIDIA A100 GPUS WITH 320 GB TOTAL GPU MEMORY

12 NVLinks per GPU, 600 GB/s GPU-to-GPU Bi-directional Bandwidth

02.

6X NVIDIA NVSWITCHES

4.8 TB/s Bi-directional Bandwidth, 2X More than the Previous-Generation NVSwitch

03.

9X MELLANOX CONNECTX-6 200Gb/s NETWORK INTERFACES

450 GB/s Peak Bi-directional Bandwidth

04.

DUAL 64-CORE AMD CPUs AND 1 TB SYSTEM MEMORY

3.2X More Cores to Power the Most Intensive AI Jobs

05.

15 TB GEN4 NVMe SSD

25 GB/s Peak Bandwidth, 2X Faster than Gen3 NVMe SSDs
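The headline figures in the component list are related by simple arithmetic, and spelling it out shows how the per-link, per-GPU, and aggregate numbers fit together. This is a back-of-envelope sketch, assuming decimal units (1 TB = 1,000 GB, 1 byte = 8 bits), that every rate is a peak value, and that "bi-directional" means the sum of both directions. The constant names and the `spec_arithmetic` helper are illustrative only, not part of any NVIDIA tool.

```python
# Back-of-envelope check of the DGX A100 component figures.
# Assumptions: decimal units (1 TB = 1000 GB, 1 byte = 8 bits), all rates
# are peak values, and "bi-directional" means both directions summed.
# All names here are illustrative, not from any NVIDIA tool.

NUM_GPUS = 8
TOTAL_GPU_MEMORY_GB = 320
NVLINKS_PER_GPU = 12
NVLINK_BIDIR_PER_GPU_GB_S = 600   # GB/s per GPU, both directions combined
NUM_NICS = 9
NIC_RATE_GBIT_S = 200             # Gb/s per ConnectX-6 port, each direction
CPU_SOCKETS = 2
CORES_PER_CPU = 64
CORE_RATIO_CLAIM = 3.2            # the "3.2X more cores" claim
SSD_CAPACITY_TB = 15
SSD_PEAK_GB_S = 25


def spec_arithmetic() -> dict:
    """Derive per-unit and aggregate figures from the headline specs."""
    return {
        # 320 GB spread evenly across 8 GPUs.
        "memory_per_gpu_gb": TOTAL_GPU_MEMORY_GB / NUM_GPUS,
        # 600 GB/s shared across the 12 NVLinks on each GPU.
        "bidir_per_nvlink_gb_s": NVLINK_BIDIR_PER_GPU_GB_S / NVLINKS_PER_GPU,
        # All 8 GPUs driving their full NVLink bandwidth at once, in TB/s.
        "aggregate_nvlink_tb_s": NUM_GPUS * NVLINK_BIDIR_PER_GPU_GB_S / 1000,
        # 9 ports x 200 Gb/s in one direction, converted from bits to bytes.
        "network_one_way_gb_s": NUM_NICS * NIC_RATE_GBIT_S / 8,
        # Both directions combined.
        "network_bidir_gb_s": 2 * NUM_NICS * NIC_RATE_GBIT_S / 8,
        "total_cpu_cores": CPU_SOCKETS * CORES_PER_CPU,
        # Baseline core count implied by the 3.2X claim (comparison system not named).
        "implied_baseline_cores": CPU_SOCKETS * CORES_PER_CPU / CORE_RATIO_CLAIM,
        # Seconds to read the whole SSD once at the quoted peak rate.
        "full_ssd_read_s": SSD_CAPACITY_TB * 1000 / SSD_PEAK_GB_S,
    }


if __name__ == "__main__":
    for name, value in spec_arithmetic().items():
        print(f"{name}: {value:g}")
```

The derived values are 40 GB of memory per GPU, 50 GB/s per NVLink (25 GB/s each way), 4.8 TB/s when all eight GPUs use their full NVLink bandwidth (consistent with the NVSwitch figure), 225 GB/s one way and 450 GB/s bi-directional across the nine network ports, 128 CPU cores against an implied 40-core baseline, and 600 s (10 minutes) to stream the full 15 TB SSD at peak.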


THE TECHNOLOGY INSIDE NVIDIA DGX A100

Major deep learning frameworks pre-installed

ESSENTIAL BUILDING BLOCK OF THE AI DATA CENTER

GAME-CHANGING PERFORMANCE

Analytics: PageRank, in graph edges per second (billions)

3,000X CPU servers vs. 4X DGX A100. Published Common Crawl data set: 128B edges, 2.6 TB graph.

Training: NLP (BERT-Large), in sequences per second

BERT pre-training throughput using PyTorch, measured over a mix of 2/3 Phase 1 and 1/3 Phase 2 (Phase 1 sequence length = 128, Phase 2 sequence length = 512). V100: DGX-1 with 8X V100 using FP32 precision. DGX A100: DGX A100 with 8X A100 using TF32 precision.
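The training comparison pits FP32 on V100 against TF32 on A100. TF32 is a Tensor Core math mode that keeps FP32's sign bit and 8-bit exponent, and therefore its dynamic range, but carries only 10 explicit mantissa bits instead of 23. The sketch below simulates that precision loss by zeroing the 13 low mantissa bits of a float32. This is an assumption-laden simplification: it truncates, whereas the hardware's actual rounding behavior may differ, and `to_tf32` is a hypothetical helper rather than a library function.

```python
import struct


def to_tf32(x: float) -> float:
    """Approximate TF32 precision by truncating a float32's mantissa.

    TF32 keeps float32's sign bit and 8-bit exponent but only 10 of its
    23 mantissa bits. This sketch simply zeroes the 13 low mantissa bits
    (truncation); it does not model the hardware's rounding mode.
    """
    # Reinterpret the value's float32 encoding as an unsigned 32-bit integer.
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    bits &= 0xFFFFE000  # clear the low 23 - 10 = 13 mantissa bits
    (truncated,) = struct.unpack("<f", struct.pack("<I", bits))
    return truncated


if __name__ == "__main__":
    print(to_tf32(1.0 + 2**-10))  # smallest step above 1.0 that TF32 keeps
    print(to_tf32(1.0 + 2**-11))  # too fine for a 10-bit mantissa, becomes 1.0
    print(to_tf32(3.0e38))        # still finite: same exponent range as float32
```

The last call illustrates the design trade-off: TF32 has FP16's mantissa width but FP32's exponent, so a value near 3e38 stays representable, whereas FP16 overflows above 65504.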

Inference: Peak compute, in TeraOPS

CPU server: 2X Intel Xeon Platinum 8280 using INT8. DGX A100: DGX A100 with 8X A100 using INT8 with structural sparsity.
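The inference figure credits part of the DGX A100 result to structural sparsity. On A100 this takes the form of fine-grained 2:4 structured sparsity: in every group of four consecutive weights, at most two are nonzero, which lets the sparse Tensor Cores skip the zeros for up to 2X math throughput. The sketch shows only the pattern itself, assuming a simple keep-the-two-largest-magnitudes rule applied to a flat Python list; `prune_2_of_4` is a hypothetical helper, and real workflows typically fine-tune the network after pruning to recover accuracy.

```python
from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Apply a 2:4 structured-sparsity pattern to a flat weight list.

    Within each consecutive group of 4 weights, the 2 with the largest
    magnitude are kept and the other 2 are set to zero.
    """
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = set(sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2])
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


if __name__ == "__main__":
    print(prune_2_of_4([0.1, -0.9, 0.4, 0.05, 1.0, 2.0, -3.0, 0.5]))
```

For example, `prune_2_of_4([0.1, -0.9, 0.4, 0.05])` keeps -0.9 and 0.4 and zeroes the other two entries, so exactly half of each group survives.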